Head in the clouds

There are still mysteries in the CAN data, and things to improve in getting the data out for processing. But it's a game of diminishing returns, and it's time, at least for now, to shift the focus to automation. My ultimate goal is to get automated reports on collected CANEdge data and, ideally, at least some sort of indicator when the data changes in a way that might require attention.

So, it's early days, but I've been planning how to approach the whole automation part. Here's a breakdown of my thoughts so far. It's by no means complete, but I'm fairly sure I can get the data processing pipeline to work well enough, at a reasonable cost, this way.

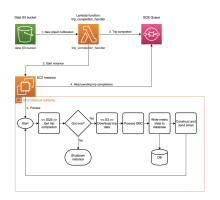

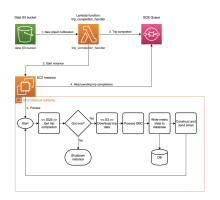

1. Trip completions

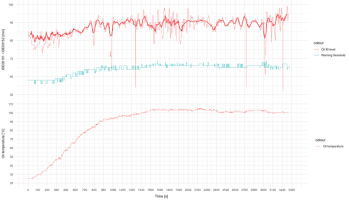

I want to process the data only as complete trips, or legs of them, from ignition on to engine off. For this purpose, I've set up the CANEdge to split data into 10 MB files (about 4 minutes of engine running). This means the CANEdge transfers data out frequently rather than in huge files, and what is stored on the device is usually just a relatively small amount.

The interesting feature of MF4 files and the CANEdge is that the files are split at an exact byte count. That means every file of a trip except the last one is exactly the same size. This forms the basis for trip completion detection: if I see a file that is not exactly the configured split size, I know it's the final piece of a trip that has completed.
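A minimal sketch of that rule in Python, the same language as the existing processing script. The split size constant is an assumption: "10M" is read here as 10 MiB (10 × 1024 × 1024 bytes), so it should be replaced with whatever exact byte count the CANEdge actually splits at. The function names are hypothetical.

```python
# Assumption: "10M" is read as 10 MiB. Replace with the exact byte count the
# CANEdge is configured to split at (10 * 1000 * 1000 if it means 10 MB).
SPLIT_SIZE_BYTES = 10 * 1024 * 1024


def is_final_segment(file_size: int, split_size: int = SPLIT_SIZE_BYTES) -> bool:
    """True if a log file is the last piece of a trip.

    Every file of a trip except the last is split at exactly `split_size`
    bytes, so any other size marks the end of the trip.
    """
    return file_size != split_size


def trip_is_complete(file_sizes: list[int], split_size: int = SPLIT_SIZE_BYTES) -> bool:
    """A trip is complete once any of its files is not full-size."""
    return any(is_final_segment(size, split_size) for size in file_sizes)
```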

A slightly annoying feature is that the last segment of any trip is only transferred to Amazon S3 when the next trip begins. This is because power to the CANEdge is cut abruptly when the engine is switched off. Working around this would be possible, but it would require a lot of work with power timers and some extra logic in the car to get the last file out of the CANEdge and transferred to S3. Imho not really worth the effort, at least not right now.

S3 has a feature that can trigger a Lambda function when a new file is created. AWS Lambda functions are just pieces of code that get executed when something happens, almost like any computer program, but there's no permanent storage: anything worth keeping has to be stored elsewhere, and nothing can be relied on to carry over from one execution to the next. There are other limits as well; for example, a Lambda function can run for a maximum of 15 minutes. So any kind of heavy processing is not a good fit for Lambda functions, but they are good for gluing several systems together and acting on external events. Lambda functions are also billed down to the millisecond of run time and cost absolutely nothing when nothing happens. I'm going to use Lambda functions to detect trip completions and to trigger the actual trip processing only for complete trips, and only when a trip is of reasonable length. I don't necessarily want a report every time I just move the car ten meters.
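A sketch of how the Lambda side could look. It assumes the standard S3 ObjectCreated notification format (which carries the bucket name, the URL-encoded object key and the object size), that each trip's files land in their own S3 folder, and that the logs are plain `.MF4` files. `completed_trips`, `SPLIT_SIZE_BYTES` and `MIN_TRIP_FILES` are hypothetical names, and the two-file minimum (one full ~4 minute file plus the final piece) is only a placeholder for "reasonable length".

```python
import posixpath
from urllib.parse import unquote_plus

# Hypothetical values: the split size must match the CANEdge configuration,
# and the minimum trip length is a placeholder for "reasonable length".
SPLIT_SIZE_BYTES = 10 * 1024 * 1024
MIN_TRIP_FILES = 2  # at least one full ~4 minute file plus the final piece


def completed_trips(event, count_files, split_size=SPLIT_SIZE_BYTES,
                    min_files=MIN_TRIP_FILES):
    """Return (bucket, folder) pairs for trips completed by this S3 event.

    `count_files(bucket, folder)` must return the number of files in the
    trip folder; it is injected so the logic can be tested without AWS.
    """
    trips = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        key = unquote_plus(record["s3"]["object"]["key"])  # keys arrive URL-encoded
        size = record["s3"]["object"]["size"]
        if not key.upper().endswith(".MF4"):
            continue  # assumption: only plain .MF4 log files matter
        if size == split_size:
            continue  # full-size file: the trip is still going on
        folder = posixpath.dirname(key)  # assumption: one S3 folder per trip
        if count_files(bucket, folder) >= min_files:
            trips.append((bucket, folder))
    return trips


def lambda_handler(event, context):
    import boto3  # bundled with the AWS Lambda Python runtime

    s3 = boto3.client("s3")

    def count_files(bucket, folder):
        # One list_objects_v2 page (up to 1000 keys) easily covers one trip.
        resp = s3.list_objects_v2(Bucket=bucket, Prefix=folder + "/")
        return resp.get("KeyCount", 0)

    trips = completed_trips(event, count_files)
    # Queueing the trips for processing would follow here.
    return {"completed_trips": [f"{bucket}/{folder}" for bucket, folder in trips]}
```

Injecting `count_files` keeps the decision logic free of AWS calls, so it can be unit-tested with a hand-written event dictionary.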

2. Trip completion queue

When a trip completion is detected, the Lambda function will write a message to something called SQS (Simple Queue Service). This is yet another Amazon service, built for holding a list of messages waiting to be processed (strict ordering needs a FIFO queue; standard queues only preserve order on a best-effort basis). The advantage of a queue is that messages can wait there for processing for a long time, up to 14 days with the maximum retention setting: if the next step in the processing pipeline is not ready to take up the task immediately for some reason, the queue will remember all messages that have not yet been processed. Hence it decouples the processing stages and lets each of them operate when it is up for it. Since trip completions are rare events, fewer than 10 per day, the queue will cost almost nothing.
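The hand-off itself is only a couple of boto3 calls. A minimal sketch, assuming the message body is just the trip's S3 folder name and that the Lambda then starts one pre-built processing instance; `enqueue_trip` and `start_worker` are hypothetical helper names, while `send_message` and `start_instances` are the regular boto3 SQS and EC2 client methods.

```python
def enqueue_trip(sqs, queue_url: str, trip_folder: str) -> str:
    """Queue one completed trip; the message body is just its S3 folder name."""
    resp = sqs.send_message(QueueUrl=queue_url, MessageBody=trip_folder)
    return resp["MessageId"]


def start_worker(ec2, instance_id: str) -> None:
    """Start the (normally stopped) processing instance."""
    ec2.start_instances(InstanceIds=[instance_id])
```

In the Lambda these would be called as `enqueue_trip(boto3.client("sqs"), QUEUE_URL, folder)` followed by `start_worker(boto3.client("ec2"), INSTANCE_ID)`, with the queue URL and instance ID as configuration. Sending the message before starting the instance matters: if the start call fails (for example because the instance is still in the middle of stopping), the trip stays safely in the queue until the next start.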

3. Data processing

The heavy lifting of data processing will be done in Amazon EC2. This is more like a traditional computer environment in the cloud: you can run Linux, Mac or Windows and have a persistent disk to install software on and hold files, just like any laptop or desktop. EC2 is billed for the time the instance is running (per second for Linux instances, with a one-minute minimum), and the disk is billed for all the time it exists and stores data, whether in use or not. Since I will have a limited number of trips on any day, and I know processing each trip will take less than half an hour, EC2 will be quite economical. The computing time itself will probably cost less than 0.10 USD per running hour, depending on how many and how efficient CPUs I end up using.
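A rough upper bound for the compute part, using only the numbers in this post (fewer than 10 trips a day, under half an hour each, under 0.10 USD per running hour) and assuming a 30-day month. Disk storage is billed separately and is not included:

$$
C_{\text{day}} < 10\,\tfrac{\text{trips}}{\text{day}} \times 0.5\,\tfrac{\text{h}}{\text{trip}} \times 0.10\,\tfrac{\text{USD}}{\text{h}} = 0.50\,\tfrac{\text{USD}}{\text{day}},
\qquad
C_{\text{month}} < 30 \times 0.50\,\text{USD} = 15\,\text{USD}
$$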

The Lambda function will start the EC2 instance after sending the trip completion message to the SQS queue. The EC2 instance will be built so that, upon boot, it looks into the SQS queue and picks up the next message for processing. If none is found, it just shuts itself down. The message will basically be just the name of a folder in S3; using this, the EC2 instance can load all the data files of the trip from S3 to the local disk for processing. The actual processing will then be just running the data files through my Python script and DBC file, like I have done manually in all the previous posts in this thread. As a result I will get summaries of all signals, and possibly CSV files in case I want to automate deep dives into some specific signals.
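The boot-time worker could look roughly like this. It is a sketch under several assumptions: the bucket name is configuration (the message only carries the folder), `process_trip` stands in for the existing Python/DBC decoding script, and the clients are regular boto3 SQS and S3 clients passed in by the caller. `run_worker` and `poweroff` are hypothetical names.

```python
import subprocess
from pathlib import Path


def run_worker(sqs, s3, queue_url, bucket, work_dir, process_trip, shutdown):
    """Process queued trips one at a time, then shut down when none are left.

    `process_trip(folder, files)` stands in for the existing Python + DBC
    decoding script; `shutdown()` powers the instance off.
    """
    try:
        while True:
            resp = sqs.receive_message(
                QueueUrl=queue_url,
                MaxNumberOfMessages=1,
                WaitTimeSeconds=10,      # long-poll briefly before deciding it's empty
                VisibilityTimeout=3600,  # hide the message while the trip is processed
            )
            messages = resp.get("Messages", [])
            if not messages:
                break
            msg = messages[0]
            folder = msg["Body"]  # the message is just the trip's S3 folder name

            # Download every object in the trip folder to the local disk.
            local_dir = Path(work_dir) / folder
            local_dir.mkdir(parents=True, exist_ok=True)
            files = []
            listing = s3.list_objects_v2(Bucket=bucket, Prefix=folder + "/")
            for obj in listing.get("Contents", []):
                target = local_dir / Path(obj["Key"]).name
                s3.download_file(bucket, obj["Key"], str(target))
                files.append(target)

            process_trip(folder, sorted(files))
            # Delete only after processing succeeded; if anything above raises,
            # the message becomes visible again later and is retried.
            sqs.delete_message(QueueUrl=queue_url, ReceiptHandle=msg["ReceiptHandle"])
    finally:
        shutdown()  # always power off, even after an error, to stop billing


def poweroff():
    """Shut the OS down; with EC2's default shutdown behaviour this stops the instance."""
    subprocess.run(["sudo", "shutdown", "-h", "now"], check=False)
```

Two SQS details matter here. A received message is only hidden for the visibility timeout (30 seconds by default), so the sketch asks for an hour to cover a processing run of up to half an hour; and the message is deleted only after processing succeeds, so a crash leaves the trip in the queue. Stopping rather than terminating on an OS shutdown is EC2's default behaviour, which keeps the disk and the installed software.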

I will set up a database on the EC2 instance, in which I will store the summaries of all the signals. This is actually a large part of the reason for choosing EC2 rather than Amazon's native databases and Lambda functions: Amazon databases usually run 24/7 and are quite costly. As I need the database only a few times a day, it's far more economical to run it only while data processing is taking place, and shut it off with the EC2 instance when not needed.
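The post doesn't pin down the database engine yet. As one low-maintenance option (my assumption, not a decision made here), SQLite from Python's standard library keeps the whole database in a single file on the instance's disk, so there is no server to start or stop at all. A minimal sketch with a hypothetical one-table schema, assuming each signal summary arrives as a dict with `min`, `max`, `mean` and `count`:

```python
import sqlite3

# Hypothetical schema: one row per (trip, signal).
SCHEMA = """
CREATE TABLE IF NOT EXISTS signal_summary (
    trip_id     TEXT NOT NULL,   -- e.g. the trip's S3 folder name
    trip_start  TEXT NOT NULL,   -- 'YYYY-MM-DD HH:MM:SS'
    signal      TEXT NOT NULL,
    min_value   REAL,
    max_value   REAL,
    mean_value  REAL,
    samples     INTEGER,
    PRIMARY KEY (trip_id, signal)
)
"""


def store_summaries(conn, trip_id, trip_start, summaries):
    """Insert one row per signal; re-processing a trip replaces its rows."""
    conn.execute(SCHEMA)
    rows = [
        (trip_id, trip_start, name, s["min"], s["max"], s["mean"], s["count"])
        for name, s in summaries.items()
    ]
    with conn:  # commits the transaction on success, rolls back on error
        conn.executemany(
            "INSERT OR REPLACE INTO signal_summary VALUES (?, ?, ?, ?, ?, ?, ?)", rows
        )
    return len(rows)
```

`INSERT OR REPLACE` together with the `(trip_id, signal)` primary key means re-running a trip overwrites its rows instead of duplicating them.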

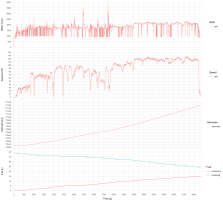

The database will really be the heart of all my data processing. Obviously it will be quite empty to start with, but with time all the signal summaries will pile up, and pretty soon I will be able to move on to building some actual trend analysis and KPIs. For example: "What did my DPF differential pressure on this trip look like compared to the average during the last month? Or the same month a year ago?"
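The first of those questions translates into a fairly small query. A sketch, assuming SQLite and a hypothetical `signal_summary` table with one row per trip and signal, where `trip_start` is stored as `'YYYY-MM-DD HH:MM:SS'` text so that string comparison matches time order. `compare_to_previous_month` is a hypothetical name.

```python
import sqlite3


def compare_to_previous_month(conn: sqlite3.Connection, signal: str, trip_id: str):
    """Return (this trip's mean, average trip mean over the preceding month).

    Returns None if the trip has no summary for `signal`; the baseline is
    None if there were no trips in the preceding month.
    """
    row = conn.execute(
        "SELECT mean_value, trip_start FROM signal_summary "
        "WHERE trip_id = ? AND signal = ?",
        (trip_id, signal),
    ).fetchone()
    if row is None:
        return None
    trip_mean, trip_start = row
    (baseline,) = conn.execute(
        """
        SELECT AVG(mean_value) FROM signal_summary
        WHERE signal = ?
          AND trip_start >= datetime(?, '-1 month')  -- window start
          AND trip_start < ?                         -- up to this trip
        """,
        (signal, trip_start, trip_start),
    ).fetchone()
    return trip_mean, baseline
```

This weights every trip equally regardless of its length; weighting by sample count would be a small change. The year-ago comparison only needs a different date window in the `WHERE` clause.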

4. Notifications

Finally, for each trip I want to send or store some kind of summary. Right now I'm thinking of just a simple email with all of the signal summaries, and maybe some calculated metrics such as estimated fuel consumption. Anyway, something that lets me know my pipeline is working its way through, and allows an easy glance at the overall state of the collected metrics. Obviously it would be great if it could highlight some anomalies as well... but let's see where this goes.
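A sketch of such an email using Python's standard `email` package. The anomaly marking is deliberately crude and purely illustrative, not something worked out in this post: a signal is flagged when its trip mean differs from a baseline mean (for example last month's average) by more than a relative threshold, 20 % here. `build_trip_email`, the threshold and the addresses are hypothetical placeholders.

```python
from email.message import EmailMessage


def build_trip_email(trip_id, summaries, baselines=None, threshold=0.2,
                     sender="pipeline@example.com", recipient="me@example.com"):
    """Build a plain-text summary email for one trip.

    `summaries` maps signal name -> {"min", "max", "mean"}; `baselines`
    optionally maps signal name -> a reference mean. Signals whose mean
    deviates from the baseline by more than `threshold` (relative) get "!!".
    """
    baselines = baselines or {}
    lines = [f"Trip {trip_id}", ""]
    for name in sorted(summaries):
        s = summaries[name]
        line = f"{name}: min {s['min']:.2f}  max {s['max']:.2f}  mean {s['mean']:.2f}"
        ref = baselines.get(name)
        if ref:  # skip missing or zero baselines
            deviation = (s["mean"] - ref) / abs(ref)
            line += f"  ({deviation:+.0%} vs baseline)"
            if abs(deviation) > threshold:
                line += "  !!"
        lines.append(line)

    msg = EmailMessage()
    msg["Subject"] = f"Trip report {trip_id}"
    msg["From"] = sender
    msg["To"] = recipient
    msg.set_content("\n".join(lines))
    return msg
```

Actually sending the message could then go through `smtplib` (its `send_message` method) or Amazon SES; the sketch only builds it.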

Whew.

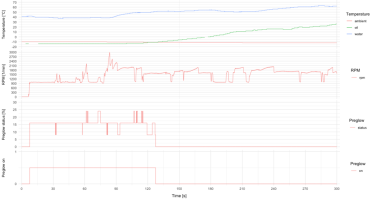

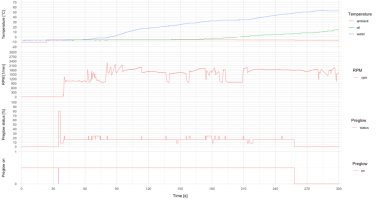

The status bits can be useful even without knowing their meaning, if they can be correlated to some other events.
